Some History

In days of yore, a foundry would pour molten lead into molds to cast type. Today, font foundries pour molten pixels into computer outlines to create electronic fonts. Describing fonts as outlines allows one font description to produce fonts for many devices of different resolutions.

Of all fonts, the lowliest is the bitmapped screen font. These can be derived from parent bitmapped fonts. They can also be created in their own right.

Electronic font characters, or glyphs, are ordered by assigning each a numeric code. In Unicode, these numeric assignments are referred to as code points.

The most common computer character code historically was the American Standard Code for Information Interchange (ASCII). This is a seven-bit code that fits conveniently into an eight-bit byte, with one bit left over for parity. This parity bit was often used for modem communications over noisy phone lines. Modern higher-speed modem protocols employ error checking and correction techniques that make use of a parity bit a thing of the past. Thus newer technology allows use of all eight bits in a byte for encoding character data, even over noisy communications lines. One eight-bit byte can encode the numbers 0 through 255, inclusive.

ASCII specifies 96 printable characters (including Space and Delete) plus 32 control characters, for a total of 128 character codes. ISO 8859-1 adopts the entire ASCII character set into the lower 128 byte values, and uses the eigth bit in a byte to represent 128 additional characters. The most notable addition to ASCII in ISO 8859-1 is accented Latin characters to support most West European alphabets.

Many coding schemes have existed for other scripts. In general, Unicode adopted these coding schemes where it made sense by fitting them into a portion of the total Unicode encoding space.

Brain Turbocharger Alert

Unicode specifies its character code points using hexadecimal, an esoteric computer counting scheme traditionally the domain of software and hardware engineers, not graphic artists. Bear with the discussion of bits and bytes and "hex" (oh my!) below, and you'll be able to speak in Unicode with the best of 'em. You'll also know how to represent a Unicode value in a web page and elsewhere.

One Code to Rule Them All

And In the Bit Stream Bind Them

(With apologies to J.R.R. Tolkien.)

Unicode is revolutionizing the international computing environment.

It has broken the one-byte barrier to allow representation of more

international and historical scripts than the world has ever seen

in earlier computing standards.

Its impact is so great that the ISO has ceased all work on their

8859 standard series to concentrate efforts on Unicode.

Today the ISO/IEC 10646 standard follows the Unicode standard.

In stark contrast to the 128 character codes of ASCII and the 256 character codes of ISO 8859-1, Unicode allows for over one million characters.

The ISO 8859-1 set of 256 character codes forms the first 256 Unicode code points and as mentioned previously, the first 128 character codes of ISO 8859-1 are identical to the 128 ASCII character codes. This provides some degree of backwards compatibility from Unicode to ASCII and ISO 8859-1.

Unicode Terminology: Unicode refers to its numeric assignments as code points. A character can be composed from one or more sequential code points. A code point can be unassigned. A code point also can be assigned to something other than a printing character, such as the special Byte Order Mark (BOM) described below.

Unicode divides its encoding into planes. Each plane has encodings for two-byte (16 bit) values. This requires twice as much storage space as the simpler one-byte ASCII or ISO-8859-1 encoding schemes.

How many code points can we represent in one Unicode plane of 16 bits?

Each binary bit can represent two values (0 or 1). Two bits can represent up to 2 × 2, or 4 values. Three bits can represent up to 2 × 2 × 2, or 8 values. Another way of writing this is 2 to the power of 3, or 2^3, where 2^3 = 2 × 2 × 2, or 8. Four bits can represent 2^4 = 2 × 2 × 2 × 2 = 16 values. Notice that 2^4 = 2^2 × 2^2.

Likewise, 8 bits can represent 2^8 = 2^4 × 2^4 = 16 × 16 = 256 values, and 16 bits can represent 2^16 = 2^8 × 2^8 = 256 × 256 = 65,536 values.

With 16 bits per Unicode plane, each plane therefore has room to represent up to 65,536 possible code points.

By using twice the space per code point of older one-byte codes, the very first Unicode plane (plane 0) has space for most of the world's modern scripts. Using twice the storage of older standards is a small price to pay for international language support. Today, most web browsers support Unicode as the default encoding scheme, as does more and more software.

The earliest versions of the Unicode Standard used a 16 bit code point; thus the original standard only covered what today is referred to as Plane 0. This was enough for most of the world's modern scripts. One notable exception, however, was rare Chinese ideographs. There are well over 65,536 Chinese ideographs alone.

Unicode only uses the first 17 planes. Thus the current Unicode standard allows for encoding up to 17 × 65,536 = 1,114,112 code points.

A Hex Upon Thee!

Here it is...the hexadecimal hump. I'll try to make it short and sweet.

If you're just plain folk, you count in decimal. There are 10 decimal digits: 0 through 9.

Computers, on the other hand, count in binary. One binary digit has two possible values (hence the name "binary"): 0 and 1. These two values can be thought of as an electronic switch (or memory location) being on or off.

If we were to take an ordinary decimal number and write it in binary as a string of ones and zeroes, it would take approximately (not exactly) three times as many digits to write as a decimal number.

Binary numbers can be written more efficiently by grouping them into clusters of four bits. We saw previously that a four bit number can store up to 2^4 = 16 values. Hexadecimal (from hexa-, meaning "six", and decimal, meaning "ten") numbers have 16 values per digit. These digits are: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, and F. The letters in hexadecimal notation can be written as upper-case or lower-case letters. The convention in the Unicode Standard is to write them as upper-case letters.

We saw above that Unicode has defined code point assignments for the first 17 planes. These are planes 0 through 16 decimal. In hexadecimal, the first 17 planes are: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F, and 10. A "10" in hexadecimal means one 16 plus zero ones.

Incidentally, notice that computers like to begin counting at zero. You can think of this as a state where a series of digital on/off switches or memory locations are all in the "off" state.

Four binary bits are represented by exactly one hexadecimal digit. Likewise, an eight bit byte is represented by exactly 8 / 4 = 2 hexadecimal digits with a range of "00" through "FF".

So we can represent a byte value as exactly two hexadecimal digits — everything works out just right.

A four-bit half of a byte is often referred to as a "nybble" or "nibble", which is represented by exactly one hexadecimal digit.

A 16 bit (two byte) number can be written as exactly 16 / 4 = 4 hexadecimal digits, from "0000" through "FFFF". This is the range of hexadecimal values of Unicode code points in each Unicode plane.

Hexadecimal numbers are written so that the reader will understand that the values are in hexadecimal, not in some other counting scheme (such as decimal). The Unicode convention is to write "U+" followed by the hexadecimal code point value, for example "U+F567". One other common practice (there are more, as you'll see later) that also appears in the Unicode standard is to write "16" as a subscript after a hexadecimal number, for example F56716. This denotes that the number F567 is in base 16.

By convention, Unicode code points in the Basic Multilingual Plane (plane 0) are written as "U+" followed by four hexadecimal digits. Code points in higher planes are written using the plane number in hexadecimal followed by the value within the plane. The maximum value of a Unicode code point, with 17 planes defined, is "U+10FFFF".

As an example, the Unicode value of an upside-down question mark could be written as U+0000BF (six hexadecimal digits), or simply U+00BF (four hexadecimal digits). Writing U+BF (two hexadecimal digits) is not common practice, nor is writing an odd number of hexadecimal digits.

The Plane Facts

The 17 Unicode planes are currently assigned as follows:

| Plane | Use |

|---|---|

| 0 | Basic Multilingual Plane |

| 1 | Supplementary Multilingual Plane (historical scripts) |

| 2 | Supplementary Ideographic Plane (Chinese/Japanese/Korean ideographs Extension B) |

| 3–D | Unassigned |

| E | Supplementary Special Purpose Plane |

| F | Private Use |

| 10 | Private Use |

Private Use areas can be assigned any desired custom glyphs. There are Private Use planes, as well as Private Use code points within the Basic Multilingual Plane.

Within the Basic Multilingual Plane (Plane 0, the plane covered by the GNU Unifont), scripts are allocated the following ranges in Unicode as of version 5.1, released in April 2008:

| Start | End | Use |

|---|---|---|

| U+0000 | U+007F | C0 Controls and Basic Latin (ASCII) |

| U+0080 | U+00FF | C1 Controls and Latin-1 Supplement [ISO-8859-1] |

| U+0100 | U+017F | Latin Extended – A |

| U+0180 | U+024F | Latin Extended – B |

| U+0250 | U+02AF | International Phoenetic Alphabet Extensions |

| U+02B0 | U+02FF | Spacing Modifier Letters |

| U+0300 | U+036F | Combining Diacritical Marks |

| U+0370 | U+03FF | Greek and Coptic |

| U+0400 | U+04FF | Cyrillic |

| U+0500 | U+052F | Cyrillic Supplement |

| U+0530 | U+058F | Armenian |

| U+0590 | U+05FF | Hebrew |

| U+0600 | U+06FF | Arabic |

| U+0700 | U+074F | Syriac |

| U+0750 | U+077F | Arabic Supplement |

| U+0780 | U+07BF | Thaana |

| U+07C0 | U+07FF | N'Ko |

| U+0800 | U+08FF | Unassigned |

| U+0900 | U+097F | Devanagari |

| U+0980 | U+09FF | Bengali |

| U+0A00 | U+0A7F | Gurmukhi |

| U+0A80 | U+0AFF | Gujarati |

| U+0B00 | U+0B7F | Oriya |

| U+0B80 | U+0BFF | Tamil |

| U+0C00 | U+0C7F | Telugu |

| U+0C80 | U+0CFF | Kannada |

| U+0D00 | U+0D7F | Malayalam |

| U+0D80 | U+0DFF | Sinhala |

| U+0E00 | U+0E7F | Thai |

| U+0E80 | U+0EFF | Lao |

| U+0F00 | U+0FFF | Tibetan |

| U+1000 | U+109F | Myanmar |

| U+10A0 | U+10FF | Georgian |

| U+1100 | U+11FF | Hangul Jamo |

| U+1200 | U+137F | Ethiopic |

| U+1380 | U+139F | Ethiopic Supplement |

| U+13A0 | U+13FF | Cherokee |

| U+1400 | U+167F | Unified Canadian Aboriginal Syllabics |

| U+1680 | U+169F | Ogham |

| U+16A0 | U+16FF | Runic |

| U+1700 | U+171F | Tagalog |

| U+1720 | U+173F | Hanunoo |

| U+1740 | U+175F | Buhid |

| U+1760 | U+177F | Tagbanwa |

| U+1780 | U+17FF | Khmer |

| U+1800 | U+18AF | Mongolian |

| U+18B0 | U+18FF | Unassigned |

| U+1900 | U+194F | Limbu |

| U+1950 | U+197F | Tai Le |

| U+1980 | U+19DF | New Tai Lue |

| U+19E0 | U+19FF | Khmer Symbols |

| U+1A00 | U+1A1F | Buginese |

| U+1A20 | U+1AFF | Unassigned |

| U+1B00 | U+1B7F | Balinese |

| U+1B80 | U+1BBF | Sundanese |

| U+1BC0 | U+1BFF | Unassigned |

| U+1C00 | U+1C4F | Lepcha |

| U+1C50 | U+1C7F | Ol Chiki |

| U+1C80 | U+1CFF | Unassigned |

| U+1D00 | U+1D7F | Phonetic Extensions |

| U+1D80 | U+1DBF | Phonetic Extensions Supplement |

| U+1DC0 | U+1DFF | Combining Diacritical Marks Supplement |

| U+1E00 | U+1EFF | Latin Extended Additional |

| U+1F00 | U+1FFF | Greek Extended |

| U+2000 | U+206F | General Punctuation |

| U+2070 | U+209F | Superscripts and Subscripts |

| U+20A0 | U+20CF | Currency Symbols |

| U+20D0 | U+20FF | Combining Diacritical Marks for Symbols |

| U+2100 | U+214F | Letterlike Symbols |

| U+2150 | U+218F | Number Forms |

| U+2190 | U+21FF | Arrows |

| U+2200 | U+22FF | Mathematical Operators |

| U+2300 | U+23FF | Miscellaneous Technical |

| U+2400 | U+243F | Control Pictures |

| U+2440 | U+245F | Optical Character Recognition |

| U+2460 | U+24FF | Enclosed Alphanumerics |

| U+2500 | U+257F | Box Drawing |

| U+2580 | U+259F | Block Elements |

| U+25A0 | U+25FF | Geometric Shapes |

| U+2600 | U+26FF | Miscellaneous Symbols |

| U+2700 | U+27BF | Dingbats |

| U+27C0 | U+27EF | Miscellaneous Mathematical Symbols – A |

| U+27F0 | U+27FF | Supplemental Arrows – A |

| U+2800 | U+28FF | Braille Patterns |

| U+2900 | U+297F | Supplemental Arrows – B |

| U+2980 | U+29FF | Miscellaneous Mathematical Symbols – B |

| U+2A00 | U+2AFF | Supplemental Mathematical Operators |

| U+2B00 | U+2BFF | Miscellaneous Symbols and Arrows |

| U+2C00 | U+2C5F | Glagolitic |

| U+2C60 | U+2C7F | Latin Extended – C |

| U+2C80 | U+2CFF | Coptic |

| U+2D00 | U+2D2F | Georgian Supplement |

| U+2D30 | U+2D7F | Tifinagh |

| U+2D80 | U+2DDF | Ethiopic Extended |

| U+2DE0 | U+2DFF | Cyrillic Extended – A |

| U+2E00 | U+2E7F | Supplemental Punctuation |

| U+2E80 | U+2EFF | CJK (Chinese/Japanese/Korean) Radicals Supplement |

| U+2F00 | U+2FDF | Kangxi Radicals |

| U+2FE0 | U+2FEF | Unassigned |

| U+2FF0 | U+2FFF | Ideographic Description Characters |

| U+3000 | U+303F | CJK Symbols and Punctuation |

| U+3040 | U+309F | Hiragana |

| U+30A0 | U+30FF | Katakana |

| U+3100 | U+312F | Bopomofo |

| U+3130 | U+318F | Hangul Compatibility Jamo |

| U+3190 | U+319F | Kanbun |

| U+31A0 | U+31BF | Bopomofo Extended |

| U+31C0 | U+31EF | CJK Strokes |

| U+31F0 | U+31FF | Katakana Phonetic Extensions |

| U+3200 | U+32FF | Enclosed CHK Letters and Months |

| U+3300 | U+33FF | CJK Compatibility |

| U+3400 | U+4DBF | CJK Unified Ideographs Extension A |

| U+4DC0 | U+4DFF | Yijing Hexagram Symbols |

| U+4E00 | U+9FCF | CJK Unified Ideographs |

| U+9FD0 | U+9FFF | Unassigned |

| U+A000 | U+A48F | Yi Syllables |

| U+A490 | U+A4CF | Yi Radicals |

| U+4D0 | U+A4FF | Unassigned |

| U+A500 | U+A63F | Vai |

| U+A640 | U+A69F | Cyrillic Extended – B |

| U+A6A0 | U+A6FF | Unassigned |

| U+A700 | U+A71F | Modifier Tone Letters |

| U+A720 | U+A7FF | Latin Extended – D |

| U+A800 | U+A82F | Syloti Nagri |

| U+A830 | U+A83F | Unassigned |

| U+A840 | U+A87F | Phags-pa |

| U+A880 | U+A8DF | Saurashtra |

| U+A8E0 | U+A8FF | Unassigned |

| U+A900 | U+A92F | Kayah Li |

| U+A930 | U+A95F | Rajang |

| U+A960 | U+A9FF | Unassigned |

| U+AA00 | U+AA5F | Cham |

| U+AA60 | U+ABFF | Unassigned |

| U+AC00 | U+D7AF | Hangul Syllables |

| U+D7B0 | U+D7FF | Unassigned |

| U+D800 | U+DBFF | High Surrogate (first half of non-BMP character) |

| U+DC00 | U+DFFF | Low Surrogate (second half of non-BMP character) |

| U+E000 | U+F8FF | Private Use Area |

| U+F900 | U+FAFF | CJK Compatibility Ideographs |

| U+FB00 | U+FB4F | Alphabetic Presentation Forms |

| U+FB50 | U+FDFF | Arabic Presentation Forms – A |

| U+FE00 | U+FE0F | Variation Selectors |

| U+FE10 | U+FE1F | Vertical Forms |

| U+FE20 | U+FE2F | Combining Half Marks |

| U+FE30 | U+FE4F | CJK Compatibility Forms |

| U+FE50 | U+FE6F | Small Form Variants |

| U+FE70 | U+FEFF | Arabic Presentation Forms – B |

| U+FF00 | U+FFEF | Halfwidth and Fullwidth Forms |

| U+FFF0 | U+FFFF | Specials |

UTF-32

The simplest way to represent all possible Unicode code points is with a 32 bit number. Most computers today are based on a 32 bit or 64 bit architecture, so this allows computers to manipulate Unicode values as a whole computer "word" of 32 bits on 32 bit architectures, or as a half computer "word" of 32 bits on 64 bit architectures.

This format is known as UTF-32 (Unicode Transformation Format, 32 bits).

Although UTF-32 allows for fast computation on 32 bit and 64 bit computers, it uses four bytes per code point. If most or all code points in a document fall within the BMP (the Basic Multilingual Plane, or Plane 0), then UTF-32 will consume about twice as much space per code point as would the more space-efficient UTF-16 format discussed below.

UTF-16

UTF-16 encodes Unicode code points as one or two 16 bit values. Any code point within the BMP is represented as a single 16 bit value. Code points above the BMP are broken into an upper half and a lower half, and represented as two 16 bit values. The method (or algorithm) for this is described below.

The highest Unicode code point value possible with the current standard is U+10FFFF, which requires 21 bits to represent. As we're about to see, this can be manipulated to fit very neatly into UTF-16 encoding, with not a bit to spare.

Unicode has 17 planes, which we can write as ranging from 0x00 through 0x10. The "0xnnnn" notation is a convention from the C computer language, and denotes that the number following the "0x" is hexadecimal. Chances are you'll run across this form of hexadecimal representation sometime if you're working with Unicode.

If we know that the plane of the current code point is beyond the BMP, then the plane number must be in the range 0x01 through 0x10. If we subtract 1 from the plane number, the resulting adjusted range will be 0x0 through 0xF — this range fits exactly in one hexadecimal digit.

What's the point? Well, if we know that a Unicode value is beyond the BMP, then it has a value of at least 0x010000 and at most 0x10FFFF. If we subtract 0x10000 from this number, then the resulting number will have the range 0x00000 through 0xFFFFF, which takes exactly 5 × 4 = 20 bits to represent.

In UTF-16 representation, we take that resulting 20 bit number and divide it into an upper 10 bits and a lower 10 bits. In order to examine bits further, we'll have to cover some binary notation. The table below shows the four binary digit value of each hexadecimal digit.

| Decimal | Hexadecimal | Binary |

|---|---|---|

| 0 | 0 | 0000 |

| 1 | 1 | 0001 |

| 2 | 2 | 0010 |

| 3 | 3 | 0011 |

| 4 | 4 | 0100 |

| 5 | 5 | 0101 |

| 6 | 6 | 0110 |

| 7 | 7 | 0111 |

| 8 | 8 | 1000 |

| 9 | 9 | 1001 |

| 10 | A | 1010 |

| 11 | B | 1011 |

| 12 | C | 1100 |

| 13 | D | 1101 |

| 14 | E | 1110 |

| 15 | F | 1111 |

You can use this table to convert hexadecimal digits to and from a binary string of four bits.

After splitting the 20 bit Unicode code point into an upper and lower 10 bits, the uppper 10 bits is added to 0xD800. The resulting number will be in the range of 0xD800 through 0xDBFF. This resulting value is called the high surrogate.

The lower 10 bits is added to 0xDC00. The resulting number will be in the range of 0xDC00 through 0xDFFF. This resulting value is called the low surrogate.

The UTF-16 encoded value of a code point beyond plane 0 is then written as two 16 bit values: the high surrogate followed by the low surrogate.

Note that UTF-32 has no need for surrogate pairs because each code point is stored as a 32 bit number, whether it is a 7 bit ASCII value or a full 21 bit code point in plane 17.

Gulliver's Travels for the New Millenium

UTF-32 and UTF-16 can be handled efficiently internally on a computer, but they share one drawback: a computer encoding a 32 bit or 16 bit value will use one particular byte ordering (known as "big endian" or "little endian", after the rivalling factions in Gulliver's Travels). Some computers store the most significant byte first; some store the most significant byte last.

Without getting too side-tracked by a discussion on endian-ness, know that Windows PCs based on Intel processors use the opposite byte ordering of Motorola and PowerPC processors on Macintosh computers. Therefore this is a very real problem for information exchange that can't be overlooked.

If data is exchanged with another computer, some guarantees must exist so that the other computer is either able to determine the byte order or is using the same byte order as the original computer. In short, UTF-32 and UTF-16 are not byte order independent.

The chosen solution is to begin a UTF-32 or UTF-16 file with a special code point called the Byte Order Mark (BOM). Inserting a BOM at the beginning of a file allows the receiving computer to determine whether or not it must flip the byte ordering for its own architecture.

The BOM has code point U+FEFF. If a receiving computer has the opposite byte ordering as the transmitting computer, it will receive this as FFFE16, because the bytes 0xFE and 0xFF will be swapped. U+FFFE just so happens to be an invalid Unicode character. The receiver can use this BOM to determine whether or not the bytes in a document must be swapped.

The UTF-32 encoding of U+FEFF is 0000FEFF16. If received by a computer with the opposite byte ordering, the receiver will read this as FFFE000016. This is above the maximum Unicode code point of 0010FFFF16. The receiver can therefore determine that it must flip the byte ordering to read the file.

UTF-8

There is another solution to the big endian versus little endian debate (and it isn't Gulliver's solution of cracking eggs in the middle). UTF-8, as its name implies, is based on handling eight bits (one byte) at a time. Because UTF-8 always handles Unicode values one byte at a time, it is byte order independent. UTF-8 can therefore be used to exchange data among computers no matter what their native byte ordering is. For this reason, UTF-8 is becoming the de facto standard for encoding web pages.

UTF-8 was created by Ken Thompson at Bell Labs for the "Plan 9 from Bell Labs" operating system (named after the movie "Plan 9 from Outer Space", possibly the worst low-budget science fiction movie of all time). Ken Thompson wanted an encoding scheme for his new operating system that was backwards-compatible with seven-bit ASCII characters but that could be extended to cover an arbitrarily large character set. What he developed has been adopted by the Unicode consortium as UTF-8.

He took advantage of ASCII being a seven bit code, with the eighth bit always equal to zero. In UTF-8, if the high-order bit is a zero, then the low-order seven bits are treated as an ordinary ASCII character. If a document only contains ASCII characters, it is already in UTF-8 format.

If the eighth bit of a byte is set to a one at the start of a new code point, the byte must begin a multi-byte code point sequence in UTF-8 as follows. The first byte in a multi-byte sequence begins with one '1' bit for each byte in the transformed code point, followed by a '0' bit. For example, if a code point is encoded as three UTF-8 bytes, then the highest byte must begin in binary as '1110', with the high-order four bits of the code point following to make up the rest of the byte.

Any subsequent bytes must begin with binary '10', followed by the next six bits of the code point to make up the rest of the byte.

UTF-8 transformation must be done in the fewest bytes possible, so there is only one valid way to represent any Unicode code point in UTF-8. Editors, input tools for non-ASCII scripts, and other tools that provide UTF-8 support should automatically generate such well-formed UTF-8 encoding.

As an example, take the Greek upper-case letter Omega (Ω) at Unicode code point U+03A9. The Greek alphabet obviously isn't part of the ASCII Latin alphabet, so therefore it must be transformed into a multi-byte sequence for UTF-8 representation.

From the Hexadecimal to Binary table above, we see that:

03A916 = 0000 0011 1010 10012.

Note that just as the subscript "16" denotes a hexadecimal number, the

subscript "2" denotes a binary number.

We can ignore the leading (beginning) zero bits to limit the UTF-8 sequence to the lowest number of bytes required to represent the code point. Thus the binary number we'll convert to UTF-8 is 11 1010 10012. Ignoring any leading zeroes is required to create a well-formed UTF-8 byte sequence.

The last byte will contain binary "10" followed by the lowest six bits of the code point's binary value. The lowest six bits of 11 1010 10012 are 10 10012. If we precede this with the bits 102, the final byte's value will be 1010 10012. From the Hexadecimal to Binary table above, we can convert this binary string into Hexadecimal. The hexadecimal equivalent is A916.

We have used the lowest six bits of the code point in the last UTF-8 byte. Now we have four more bits remaining: 11102. This can fit in a second byte, preceded by 1102. This prefix denotes a multi-byte code point that comprises two bytes (because it begins with two ones, then a zero). Any unfilled middle bits in this top-most byte are set to zero. So the first UTF-8 byte for Omega will be 1100 11102. Again from the Hexadecimal to Binary table above, we see that this is equivalent to CE16.

Therefore the UTF-8 encoding of U+03A9 is the two-byte sequence 0xCE, 0xA9. You'll sometimes see such a Unicode sequence written as <CE AD>. If you see that notation, the numbers are always understood to be in hexadecimal even if they don't contain letters.

Note that in UTF-8 encoding, if the upper bit of a byte is a zero, then that byte must contain an ordinary seven-bit ASCII character. Thus UTF-8 is perfectly backwards-compatible with ASCII.

If the upper two bits in a byte are "10", then the byte must be part of a multi-byte UTF-8 sequence, but not the first byte. If the upper two bits begin with "11", then the byte must be the first byte of a multi-byte UTF-8 code point sequence. This byproduct allows rapid string searching. The ability to perform string searching quickly even if jumping into the middle of a multi-byte character sequence was one of Ken Thompson's design goals for what has become UTF-8 encoding. A full discussion of efficient string searching methods with UTF-8 is beyond the scope of this introductory tutorial.

UTF-8 transformation has one other restriction: it must not directly transform surrogate pairs. Such pairs should be converted into their full 21-bit representation (for example, using UTF-32 as an intermediate format) and then converted to UTF-8.

To specify that you'll be using UTF-8 values in an HTML document, of your HTML document, inlcude the following Meta tag in the <head> section:

<META http-equiv="Content-Type" content="text/html; charset=UTF-8">

|

Tables for Your Troubles

Congratulations! You now know just about all you need to know in order

to make your way through the Unicode standard.

As Julius Cæsar said,

"Post prœlium, præmium" — "After the battle, the reward."

There's more to Unicode and to Unicode encoding, but you now have a

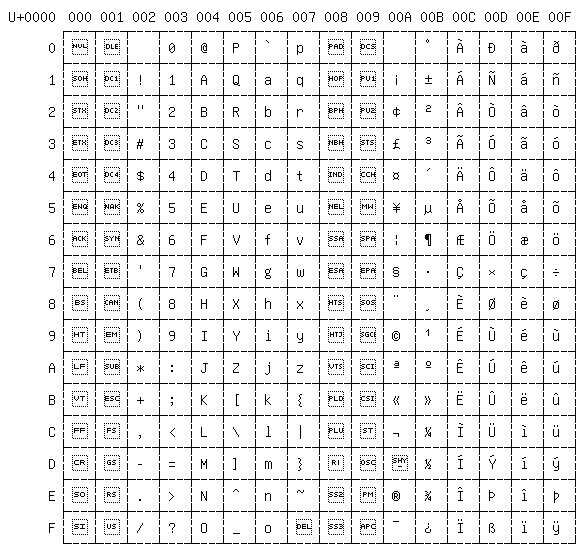

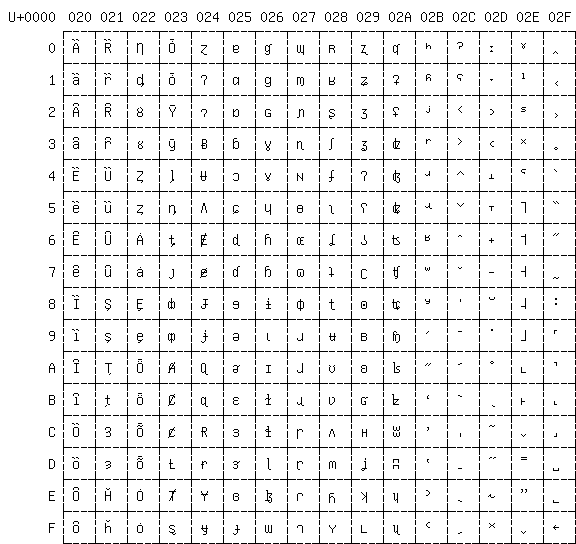

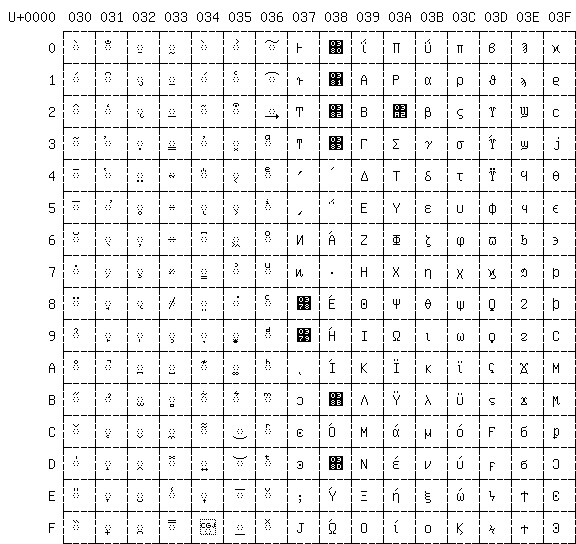

basic foundation. Below are handy charts of the first four groups of

blocks of 256 code points in Unicode, courtesy of the GNU Unifont and

the unihex2bmp utility.

Looking at the first table below, you can see that the ligature "æ"

has Unicode value U+00E6. You can write "æ" in your

HTML source to create this character. The character "&" begins a

special HTML character, "#" signifies that the character is specified

as a number rather than a name, the "x" denotes that the number will be

in hexadecimal, and the special sequence ends with a ";" character.

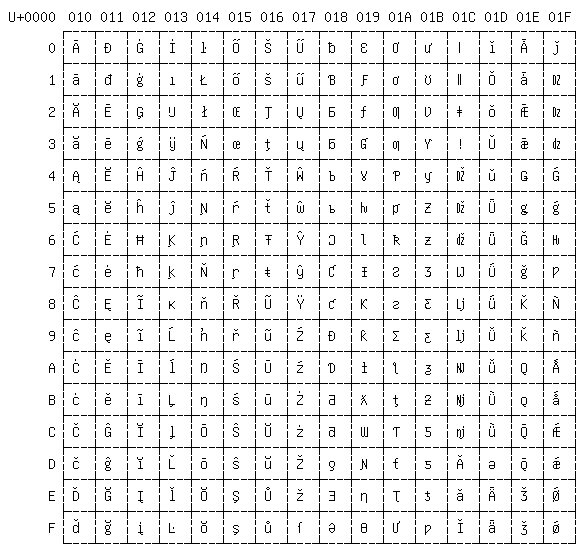

The ligature "œ" has Unicode value U+0153, so it appears in the

second chart below, which has the range U+0100 through U+01FF.

[Interestingly, this basic Latin dipthong, which is also used in writing

French words such as œvre, didn't make it into the Latin-1

ISO standard.] You can write "œ" in an HTML

document to create this character.

Note that the upper left-hand corner of these charts shows the Unicode

plane. If this is ever greater than "U+0010", there is a bug in the

unihex2bmp utility that created the bitmap.

If displaying the Basic Multilingual Plane, the value in the upper

left-hand corner of these charts should always be "U+0000" —

if not, something went wrong with the program.

The full eight hexadecimal digits of a UTF-32 Unicode code point are represented in these charts as a final cross-check that the software handled everything correctly. For example, the full eight hexadecimal digit UTF-32 representation of capital letter "A" is "U+0000 0041".

There is one special recurring symbol in many glyphs: a dashed circle. This appears in combining characters. Combining characters contain a glyph on the side or through this dashed circle, signifying where the glyph should be placed with a preceding character. For example, the intention is that the character "a" followed by the umlaut combining character U+0308 will be combined into a single "ä" character for display. The umlaut glyph appears above the dashed circle, and so should be placed above the preceding character glyph.

Okay, enough talk...let the charts begin!